The Google API leak and E-E-A-T

In his article, Rand Fishkin pointed out that the concept of Experience, Expertise, Authoritativeness and Trustworthiness (E-E-A-T) is a bit blurry and could be mainly propaganda. The problem with this statement, as we tried to explain in our previous article about the Google API Leak, is that Google uses human quality ratings (including E-E-A-T evaluation) to test changes to its algorithm before deploying them.

Google has a test set of 15,000 queries with the corresponding pages rated by human evaluators. Every time they experiment with changes to the search algorithm, they test the changes against this test set. At later stages of the ranking pipeline, the Google search algorithms use a deep neural network called DeepRank. Essentially, DeepRank learns to make judgements similar to those human evaluators make. To do this successfully, it needs access to information and metrics similar to those the human evaluators look at.
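To make the testing idea concrete, here is a minimal sketch of how a ranking change could be scored against human ratings. It is an illustration only, not Google's actual pipeline: the metric (NDCG), the 0 to 4 rating scale, the function names and the toy data are all our assumptions.

```python
import math

def dcg(ratings):
    """Discounted cumulative gain for ratings listed in ranked order."""
    return sum(r / math.log2(i + 2) for i, r in enumerate(ratings))

def ndcg(ranked_pages, human_ratings):
    """NDCG of one ranking, given human ratings per page (hypothetical 0-4 scale)."""
    gains = [human_ratings.get(page, 0) for page in ranked_pages]
    ideal = dcg(sorted(human_ratings.values(), reverse=True)[:len(gains)])
    return dcg(gains) / ideal if ideal > 0 else 0.0

def mean_ndcg(rank_fn, test_set):
    """Average NDCG of a ranking function over all rated test queries."""
    scores = [ndcg(rank_fn(query), ratings) for query, ratings in test_set.items()]
    return sum(scores) / len(scores)

# Toy test set: query -> {page: human rating}. All values are invented.
test_set = {
    "hair styling tips": {"a.com": 4, "b.com": 1, "c.com": 0},
    "best running shoes": {"x.com": 3, "y.com": 2, "z.com": 0},
}

# Two hypothetical versions of the algorithm, represented by their outputs.
baseline_rankings = {
    "hair styling tips": ["b.com", "a.com", "c.com"],
    "best running shoes": ["x.com", "y.com", "z.com"],
}
candidate_rankings = {
    "hair styling tips": ["a.com", "b.com", "c.com"],
    "best running shoes": ["x.com", "y.com", "z.com"],
}

baseline_score = mean_ndcg(baseline_rankings.get, test_set)
candidate_score = mean_ndcg(candidate_rankings.get, test_set)

# A change would only be considered if it agrees with the raters at least as well.
print(f"baseline={baseline_score:.3f} candidate={candidate_score:.3f}")
```

In this toy setup the candidate ranking places the highest-rated page first, so it scores higher than the baseline. The real test set and metric are not described in the leak.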

We decided to look through the Search Quality Raters Guidelines and map particular parts of the guidelines to the modules and attributes from the Google API Leak. Here is what we found.

“For example, you may be able to tell someone is an expert in hair styling by watching a video of them in action (styling someone's hair) and reading others' comments (commenters often highlight expertise or lack thereof).”

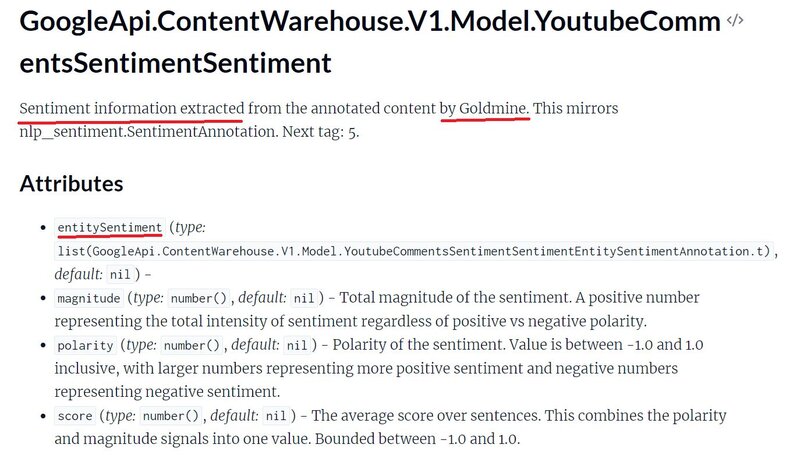

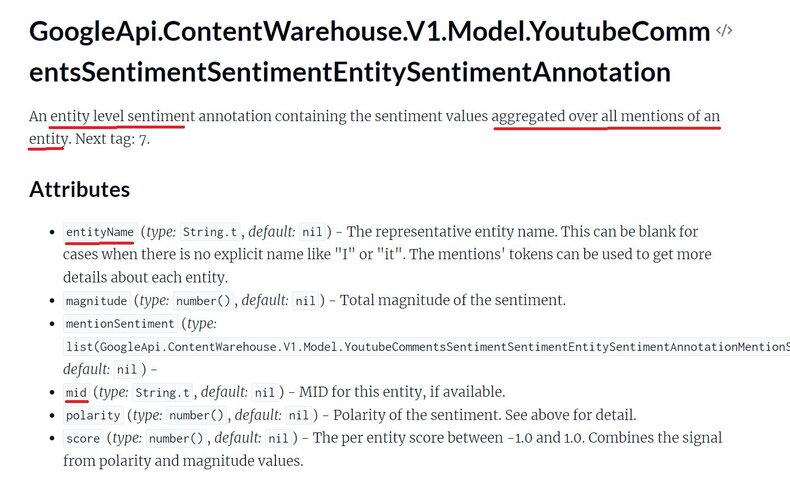

There aren’t many modules associated with YouTube in the API leak, and most of them mention sentiment and entities. These modules clearly don’t cover all of YouTube’s functionality, so we speculate that they describe how YouTube data is used in Search. In the example above, sentiment is extracted from YouTube comments and aggregated for a certain entity. The entity is referenced by the MID field, which stands for Machine ID, a unique identifier of an entity. It’s a reference tied to the database where Google stores Knowledge Graph entities.
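As a rough sketch of what "aggregated for a certain entity" could mean, the snippet below averages comment-level sentiment per MID. The class name, the field names, the score range and the placeholder MIDs are hypothetical; the leak does not tell us how the aggregation is actually computed.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class CommentEntitySentiment:
    mid: str          # Knowledge Graph entity ID mentioned in the comment
    sentiment: float  # hypothetical score in [-1.0, 1.0]

def aggregate_sentiment(mentions):
    """Return the mean sentiment per entity MID."""
    totals = defaultdict(lambda: [0.0, 0])
    for m in mentions:
        totals[m.mid][0] += m.sentiment
        totals[m.mid][1] += 1
    return {mid: total / count for mid, (total, count) in totals.items()}

# Placeholder MIDs and scores, invented for illustration.
mentions = [
    CommentEntitySentiment("/m/0example1", 0.75),
    CommentEntitySentiment("/m/0example1", 0.25),
    CommentEntitySentiment("/m/0example2", -0.5),
]

per_entity = aggregate_sentiment(mentions)
print(per_entity)
```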

“Assess the true purpose of the page. If the website or page has a harmful purpose or is designed to deceive people about its true purpose, it should be rated Lowest.”

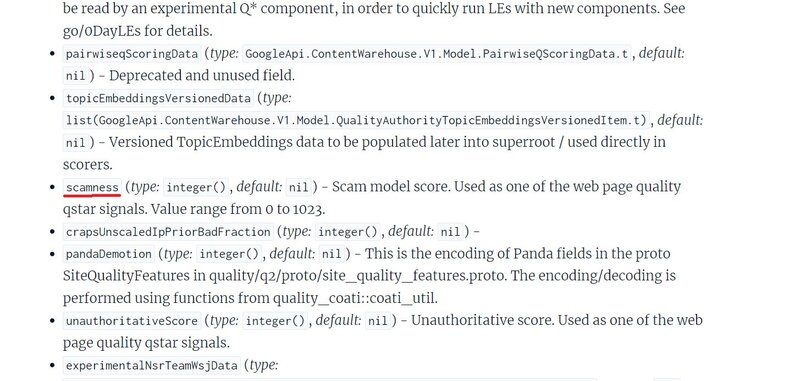

There is a scamness metric, which seems to measure how likely the page is to be a scam or designed to deceive people.

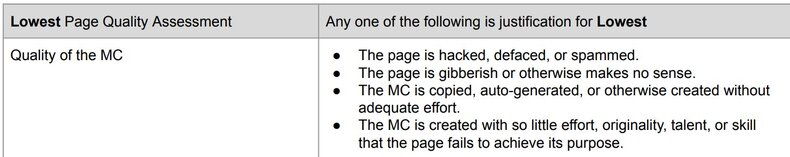

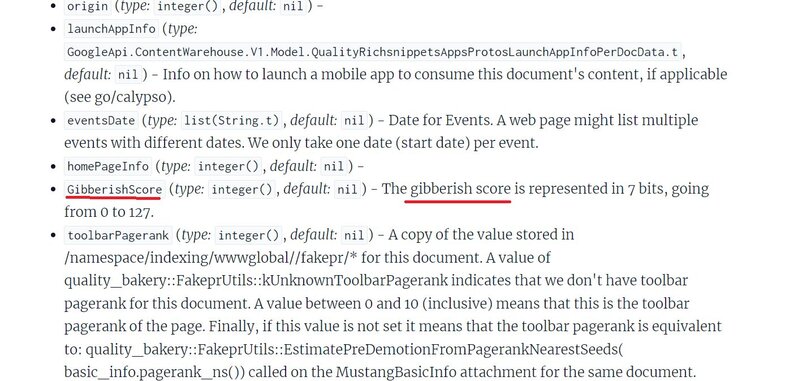

“The page is hacked, defaced, or spammed”

The “spamMuppetSignals” field contains hacked-site signals.

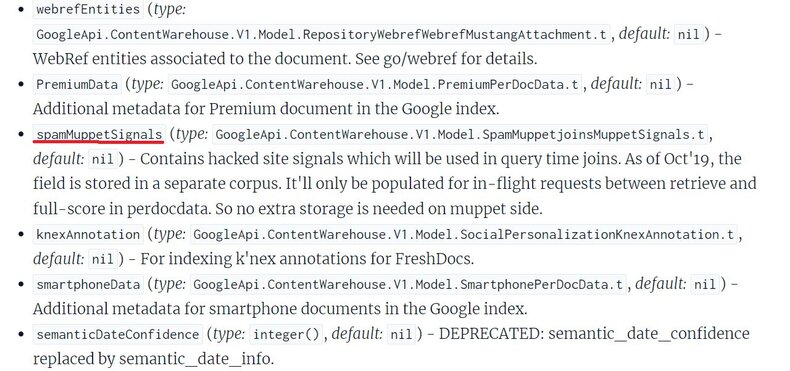

“The page is gibberish or otherwise makes no sense.”

There is a “GibberishScore” for every document.

“The MC is copied, auto-generated, or otherwise created without adequate effort.”

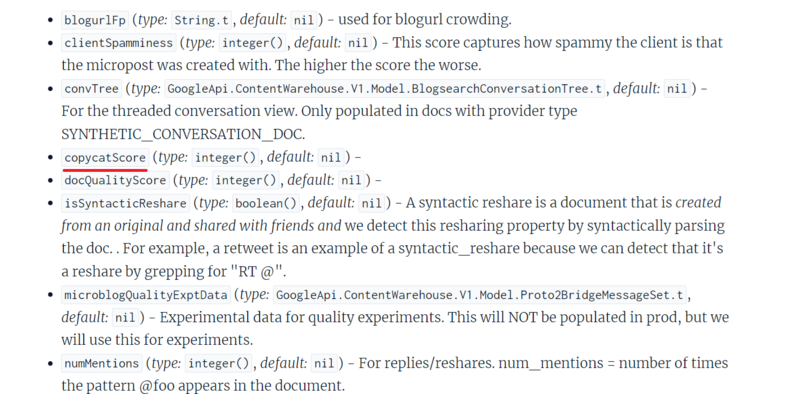

There is the “copycatScore” field inside the BlogPerDocData module. It’s not clear what it does, but it may signal that the document was copied from another source.

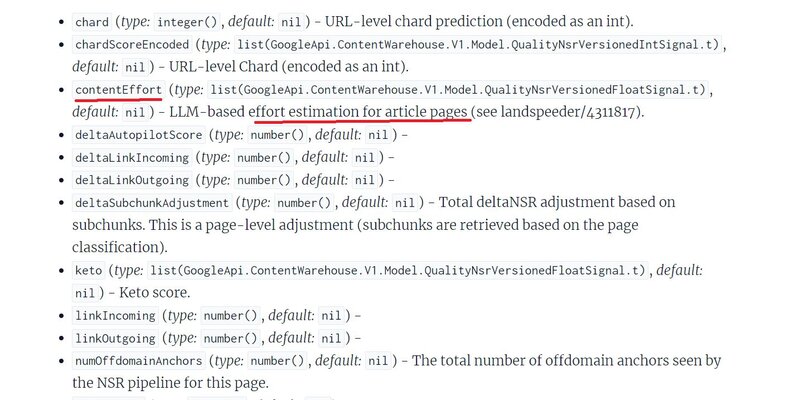

The “contentEffort” param estimates the amount of effort required to produce an article page.

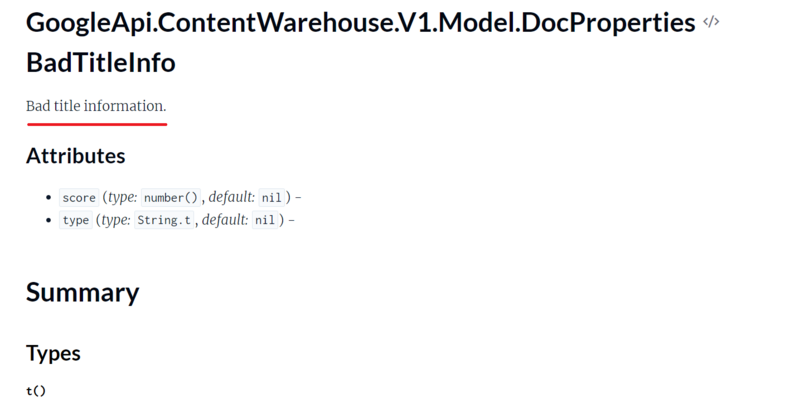

“The page title is extremely misleading, shocking, or exaggerated.”

“DocPropertiesBadTitleInfo” contains bad title information: its score and type.

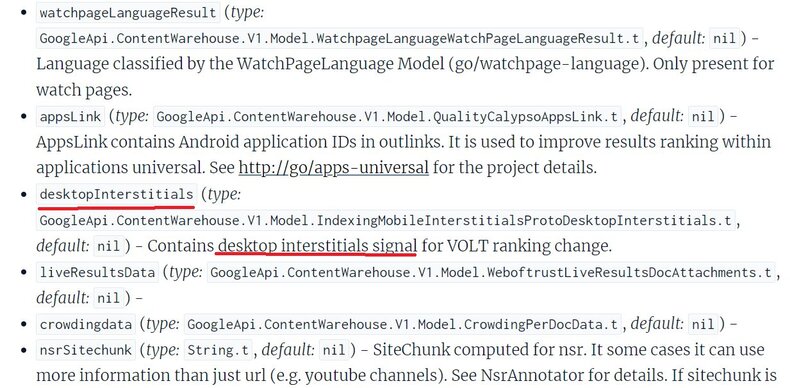

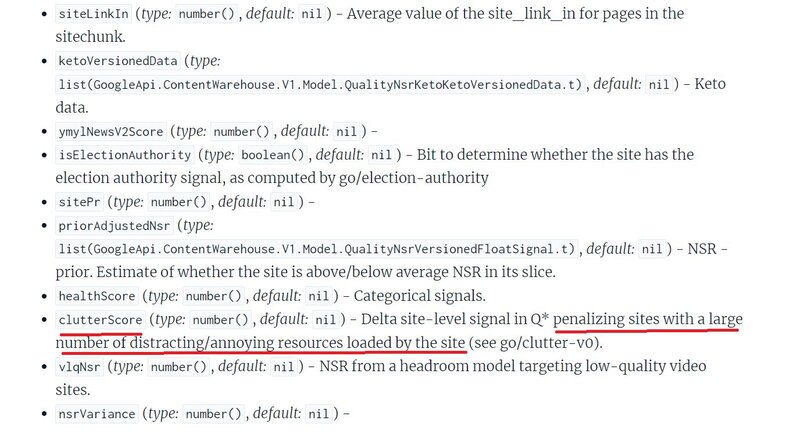

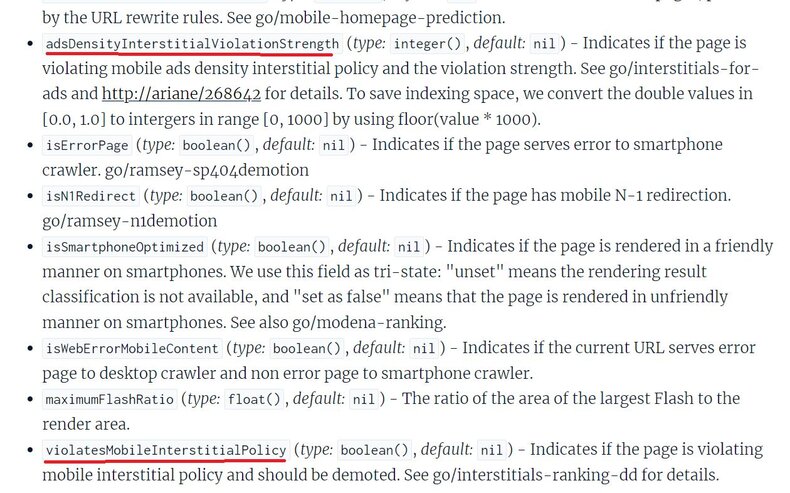

“The MC is deliberately obstructed or obscured due to Ads, SC, interstitial pages, download links or other content that is beneficial to the website owner but not necessarily the website visitor.”

An interstitial is a full-screen ad that appears before the page is fully loaded or during a transition to another page.

There are several modules and params related to different types of interstitials. There is also the “clutter score” which penalizes sites with a large number of distracting and annoying resources.

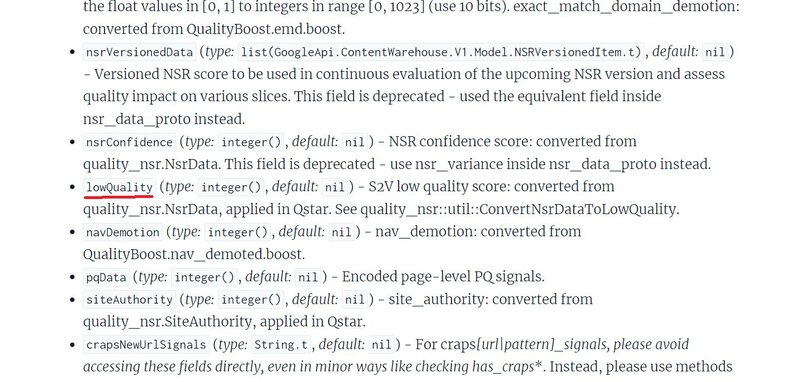

“The page is deliberately created with such low quality MC that it fails to achieve any purpose”

There is the “lowQuality” param which can be used to identify sites with low quality content.

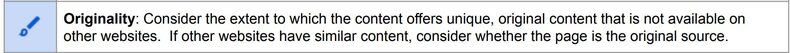

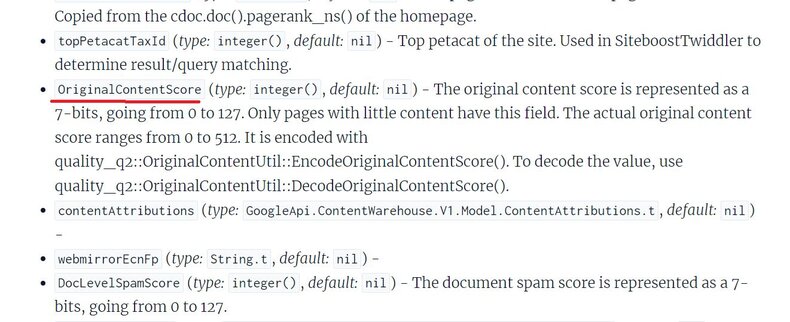

“Originality: Consider the extent to which the content offers unique, original content that is not available on other websites. If other websites have similar content, consider whether the page is the original source.”

Google measures an original content score.

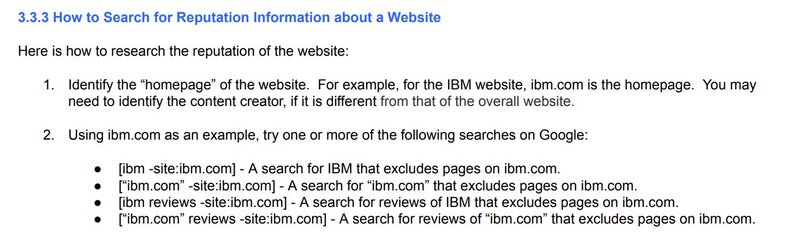

To research a website's reputation, Google recommends a query of the form [example.com -site:example.com], then looking for reputable articles and Wikipedia articles about the site. Because top pages in search results are likely to come from websites with high PageRank, this recommendation is reminiscent of how the PageRank algorithm works: if other reputable, high-authority sites link to your site, it is deemed reputable and high-authority as well.
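For readers unfamiliar with the idea, here is a minimal sketch of the classic PageRank power iteration on a toy link graph. It follows the textbook formulation with a damping factor of 0.85; the domain names are invented, and nothing here is meant to reflect how Google computes PageRank in production today.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict: page -> list of pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page gets a small baseline share (the "random jump").
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on, split evenly across its outlinks.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page: spread its rank evenly over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank

# Toy link graph with invented domains.
links = {
    "news.example": ["yoursite.example"],
    "wiki.example": ["yoursite.example", "news.example"],
    "blog.example": ["wiki.example"],
    "yoursite.example": [],
}

ranks = pagerank(links)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

In this graph, yoursite.example ends up with the highest score because two other sites link to it, which is the intuition behind "reputable sites linking to you make you reputable".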

The quality metrics that may be involved in E-E-A-T calculations are mostly contained in three modules: PerDocData, QualityNsrNsrData and CompressedQualitySignals.

The PerDocData module contains per-document data and has the following interesting attributes: spamtokensContentScore, ymylHealthScore, authorObfuscatedGaiaStr, OriginalContentScore, ymylNewsScore, desktopInterstitials, GibberishScore, commercialScore, SpamWordScore.

The QualityNsrNsrData module contains site-wide quality signals and has the following interesting attributes: ugcScore, titlematchScore, chromeInTotal, videoScore, shoppingScore, nsr, localityScore, tofu, clutterScore, siteQualityStddev, impressions, directFrac, articleScore, ymylNewsV2Score, sitePr, healthScore.

The CompressedQualitySignals module contains primarily document-level quality signals and has the following interesting attributes: ugcDiscussionEffortScore, productReviewPDemoteSite, lowQuality, navDemotion, siteAuthority, authorityPromotion, productReviewPUhqPage, productReviewPPromoteSite, topicEmbeddingsVersionedData, scamness, unauthoritativeScore.

Summary

It seems clear to us that Google is attempting to measure E-E-A-T algorithmically. The training data set is provided by the army of Google Quality Raters, and the API module attributes show the features that the trained machine learning algorithm is likely using.

The output from these algorithms combined with the link graph and PageRank scoring can quite easily be used as an algorithmic approximation for E-E-A-T.

Will it be as perfect as a human evaluation? Possibly not, but it’s an impossible task to have humans review every webpage and website, whereas algorithms can run these evaluations at scale.