Google API Leak - Detailed Analysis

What do the leaked Google API docs tell us?

The Google API documentation leak of May 2024 describes different microservices used within the Google Search infrastructure, which communicate with each other via protocol buffers. You can think of protocol buffers as a compact binary format for structured data, an alternative to text formats such as JSON that is faster to process and takes less space to transfer. You can think of microservices as small individual pieces, or components, of functionality.
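To give a feel for why a binary format with a schema agreed in advance can be smaller than a text format, here is a minimal sketch using only the Python standard library. It does not use the real protocol buffer wire format; the fixed byte layout and the field names (`doc_id`, `pagerank`, `is_spam`) are assumptions made purely for illustration.

```python
import json
import struct

# Hypothetical per-document record; the field names are illustrative only.
record = {"doc_id": 123456789, "pagerank": 0.4375, "is_spam": False}

# Text encoding: field names and values are spelled out as characters.
as_json = json.dumps(record).encode("utf-8")

# Binary encoding with a layout both sides know in advance:
# "<Qf?" = little-endian unsigned 64-bit int, 32-bit float, 1-byte bool.
LAYOUT = "<Qf?"
as_binary = struct.pack(
    LAYOUT, record["doc_id"], record["pagerank"], record["is_spam"]
)

# The receiver decodes the bytes using the same layout.
doc_id, pagerank, is_spam = struct.unpack(LAYOUT, as_binary)

print(len(as_json), "bytes as JSON")
print(len(as_binary), "bytes as packed binary")
```

Because the field names live in the shared schema rather than in every message, the binary form carries only the values.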

This approach of splitting a large and complex application into multiple small pieces has many benefits. One of them is that many teams can work on different parts of Google search functionality simultaneously without interfering with each other, while consuming data coming from different microservices. For example, there may well be teams solely concerned with fighting spam, or trending news, or image search, etc.

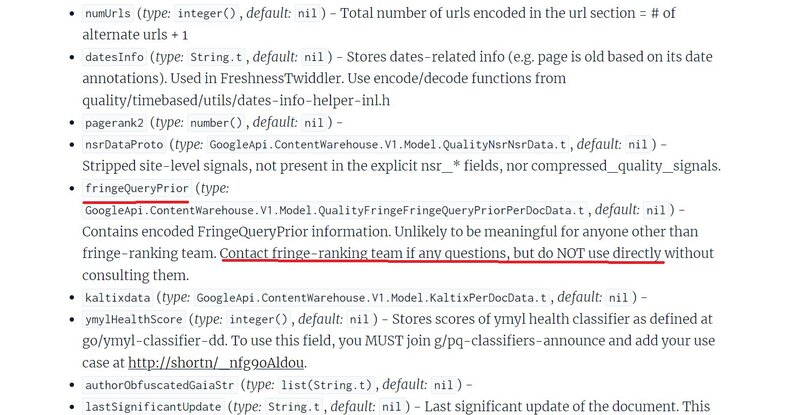

The documentation contains many examples of fields that are used solely by an individual team, such as the fringe-ranking team, or that were introduced specifically for a certain project or initiative, for example the Tundra project.

Can we trust this API leak?

Yes, Google has previously confirmed the leak, but even without the official confirmation, a savvy reader could see a lot of overlap between internal system names and specific details used in this documentation and previous Google leaks. We will get back to deciphering some of these names later in the article.

Which signals does Google use for ranking?

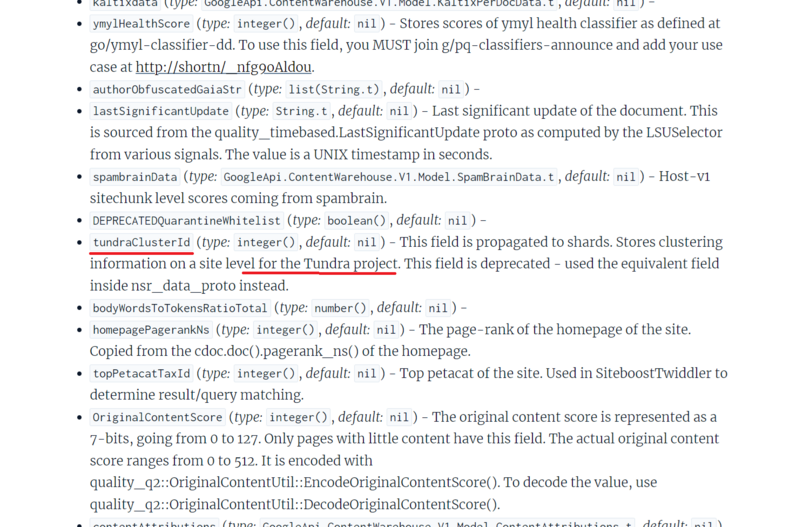

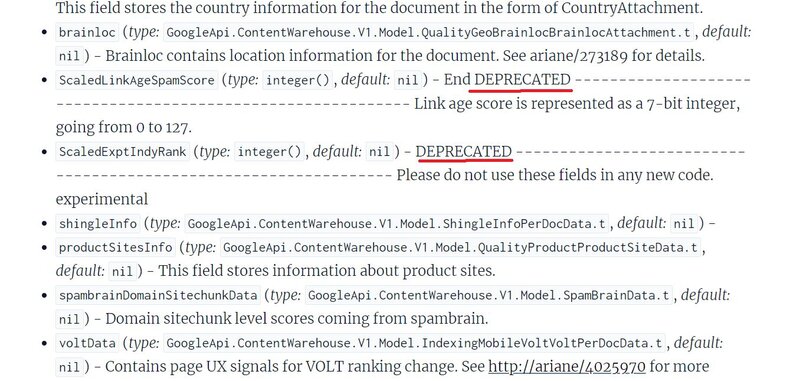

Google teams probably still use most of the fields in the documentation to work on aspects of Google search unless these fields are marked as deprecated.

This deprecated flag must be important. Because this documentation is used by many different teams, and most likely by new members of these teams, it’s important to communicate to them that they should not rely on any obsolete fields when building new functionality or experimenting with the data.

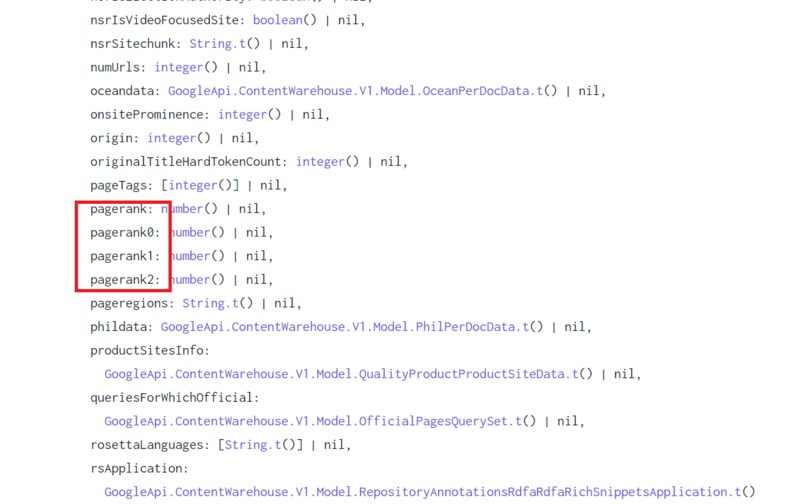

On the other hand, we can see an evolution of different approaches to certain metrics. For example, for PageRank, there are many fields like pagerank0, pagerank1, pagerank_ns (nearest seeds), independence rank, etc.

Let’s take a look at the module PerDocData. It has the following description:

“Make sure you read the comments in the bottom before you add any new field. NB: As noted in the comments, this protocol buffer is used in both indexing and serving. In mustang serving implementations we only decode perdocdata during the search phase, and so this protocol should only contain data used during search.”

This is interesting. It warns against adding any new fields or information to this protocol buffer. Why would this be? Indexing is the process of storing crawled pages in the search index (when parts of this information are updated), and serving seems to refer here to serving search results. Mustang is a Google internal system responsible for serving. Although this module can be used both during indexing (probably adding new info), and serving (actual search), the documentation warns that “this protocol should only contain data used during search”.

Google is famous for its speed. One hypothesis could be that the initial document result set returned from the inverted index after classic keyword matching (BM25) is then enriched with this PerDocData and passed to the ranking stage. This means that the more data is added, the slower the overall process could be. That’s why the documentation warns against adding data during the indexing phase that won’t be used during the search phase, or serving. This may lead us to the conclusion that most of the fields in the PerDocData module are used in ranking or are important for ranking.
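The hypothesis above can be sketched as a three-stage pipeline. This is a toy illustration, not Google's implementation: the index contents, the `quality` field, and the function names are all hypothetical, and stage 1 uses plain boolean term matching as a stand-in for BM25.

```python
# Hypothetical inverted index: term -> set of document ids.
INVERTED_INDEX = {
    "leak": {1, 2, 3},
    "google": {2, 3, 4},
}

# Hypothetical per-document data, loosely modeled on the idea of PerDocData.
PER_DOC_DATA = {
    1: {"quality": 0.2},
    2: {"quality": 0.9},
    3: {"quality": 0.5},
    4: {"quality": 0.7},
}

def retrieve(query_terms):
    """Stage 1: documents that contain every query term."""
    postings = [INVERTED_INDEX.get(term, set()) for term in query_terms]
    return set.intersection(*postings) if postings else set()

def enrich(doc_ids):
    """Stage 2: attach per-document data to each candidate."""
    return [{"doc_id": d, **PER_DOC_DATA[d]} for d in sorted(doc_ids)]

def rank(candidates):
    """Stage 3: order candidates using the attached data."""
    return sorted(candidates, key=lambda c: c["quality"], reverse=True)

results = rank(enrich(retrieve(["leak", "google"])))
print([r["doc_id"] for r in results])  # [2, 3]
```

Every field stored per document has to be decoded in stage 2 for every candidate, which is one plausible reason to keep that record limited to data the search phase actually needs.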

What are the weights of different ranking factors?

The concept of a precise weight of a ranking factor is a bit problematic. The general impression that people have is that each factor has its own weight, and then they are all summed up together to produce some numerical score. In machine learning this technique is called Linear Regression. Though it may work, it’s a rather simplistic model that captures only straightforward relationships. In 2D space this model can be represented as a straight line.

Image source https://www.youtube.com/watch?v=7teudGhdnqo
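The weighted-sum picture that many people have in mind can be sketched as follows. The factor names and weights are invented for illustration; nothing in the leaked documentation provides such numbers.

```python
# Hypothetical weights; real weights, if they exist in this form, are unknown.
WEIGHTS = {"pagerank": 0.5, "title_match": 0.3, "freshness": 0.2}

def linear_score(features):
    """Weighted sum of factor values: the simplest possible ranking model."""
    return sum(WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS)

doc_a = {"pagerank": 0.8, "title_match": 1.0, "freshness": 0.1}
doc_b = {"pagerank": 0.4, "title_match": 0.0, "freshness": 0.9}

print(round(linear_score(doc_a), 2), round(linear_score(doc_b), 2))

# In a linear model, improving one factor always adds the same amount,
# no matter what the other factors look like.
bumped = dict(doc_b, title_match=1.0)
gain = linear_score(bumped) - linear_score(doc_b)
print(round(gain, 2))
```

The fixed gain in the last step is exactly the limitation of this model: a factor cannot matter more in one situation and less in another.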

More complex models, such as neural networks, can capture more complex relationships, such as the one displayed in the graph below:

Image source https://www.youtube.com/watch?v=7teudGhdnqo

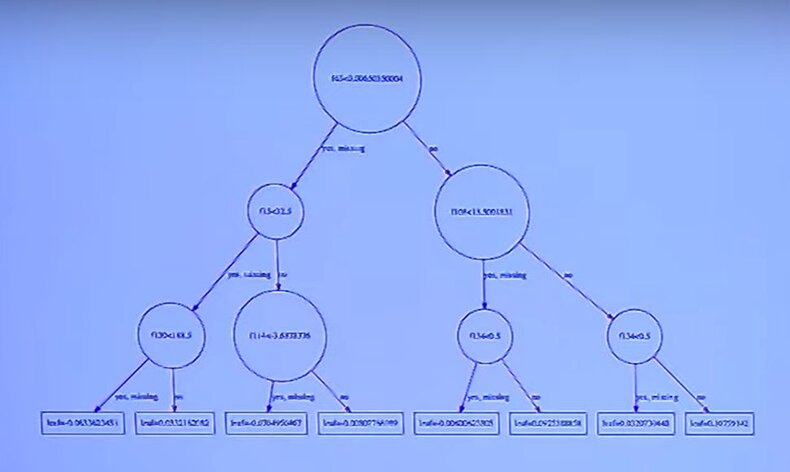

In 2010 Microsoft released the MSLR-WEB10K learning-to-rank dataset, which contains 136 search features for each query-document pair. Each pair was graded by a human rater on a scale from 0 (irrelevant) to 4 (perfectly relevant) for a particular query. It was shown that for this problem decision tree models yield much better results than a simple linear regression. How decision trees work can be seen in the following picture:

Image source https://www.youtube.com/watch?v=7teudGhdnqo

If, for example, some factor1 is greater than X, rank all documents with factor1 > X as more relevant, and those with factor1 < X as less relevant. Then, for the more relevant documents on the left, take a look at factor2 and threshold Y, and repeat this process until all documents are ranked.
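The thresholding process just described can be written as a tiny hand-built tree. This is a sketch only: `factor1`, `factor2`, the thresholds, and the relevance grades are placeholders, and a real learned model would combine many such trees whose splits are fitted from data.

```python
def tree_score(doc, x=0.5, y=0.3):
    """Tiny hand-written decision tree over two hypothetical factors."""
    if doc["factor1"] > x:
        # factor2 is only consulted on this branch of the tree.
        return 3 if doc["factor2"] > y else 2
    return 1

docs = [
    {"id": "a", "factor1": 0.9, "factor2": 0.1},
    {"id": "b", "factor1": 0.2, "factor2": 0.9},
    {"id": "c", "factor1": 0.7, "factor2": 0.8},
]

ranked = sorted(docs, key=tree_score, reverse=True)
print([d["id"] for d in ranked])  # ['c', 'a', 'b']
```

Document `b` has the highest `factor2` of the three, yet it ranks last because `factor2` is never examined when `factor1` is below its threshold. A single fixed weight per factor cannot express that kind of conditional importance.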

Of course some factors have much more importance, such as PageRank for example, but for others, it could be the case that under a certain condition a factor is more important, but under another condition that same factor is less important. We cannot say there is a linear relationship between a factor and the rankings.

Similarly, the previous Yandex leak revealed only the initial weights of the ranking factors. These ranking factors were later fed into a machine learning model MatrixNet, and adjusted by it.

Although we don’t know the weights and importance of the ranking factors from the leaked documentation, at least we can get an impression of what features Google uses for ranking. Instead of decision trees, Google uses deep neural networks (DeepRank) at a later stage of the ranking pipeline. The point here is the same: instead of each ranking factor having an exact weight, they work as a group, and have more complex relationships than just linear dependence.

Google API Leak Insights

We’ve already covered a lot of insights into Google’s inner workings. Let’s briefly touch on a few more.

Authors may be important

Google stores information about document authors in a string format.

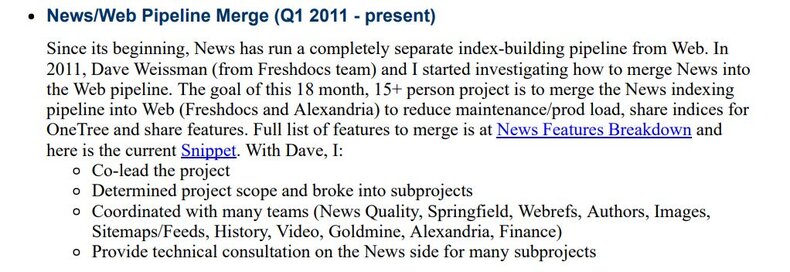

Though we don’t know exactly how this information is used, there is some evidence that this information could be important. Below is an excerpt from the leaked Paul Haahr resume, stating that he “coordinated with many teams (News Quality, Springfield, Webrefs, Authors, Images, Sitemaps/Feeds, History, Video, Goldmine, Alexandria, Finance)”

Webrefs are related to web entities, Goldmine is related to annotations, Alexandria is an indexing system, so all these teams seem to represent different aspects of search, including Authors. If there is/was a whole team of people at Google solely concerned with Author attribution, it may indicate that Google deems this information to be important.

Some people say that E-E-A-T is only a concept for quality raters, and Google doesn’t use it much. I don’t think so. Google has a test set of 15,000 queries along with the URLs returned for these queries. Each URL is rated by a human rater (has an IS score). When an engineer comes up with an idea for how to improve rankings, they test what results the new algorithm returns. Usually the change just slightly alters the order of results, and for these results there is already an IS score. If the new algorithm surfaces new results, the Google team sends them to quality raters to assign an IS score. This way Google engineers can compare the old algorithm’s results with the new algorithm’s results. It’s a very quick and cheap process. In any given year Google does more than 600,000 such experiments.

These IS ratings are a measure of the quality of an algorithm. In other words, the algorithm tries to match or approximate these ratings. In order to do it well, the algorithm should have features or signals to quantify (measure numerically) E-E-A-T, because human raters take it into account while producing ratings.
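The experiment loop described above can be sketched roughly like this. The data, the function name `evaluate`, and the use of a plain mean are assumptions for illustration; the actual metric Google computes from IS scores is not described in the source.

```python
# Hypothetical cache of human ratings: (query, url) -> IS score.
is_scores = {
    ("q1", "a.com"): 0.9,
    ("q1", "b.com"): 0.4,
    ("q1", "c.com"): 0.7,
}

def evaluate(query, results, ratings):
    """Return (mean IS score of rated results, URLs that still need a rating)."""
    rated = [ratings[(query, u)] for u in results if (query, u) in ratings]
    unrated = [u for u in results if (query, u) not in ratings]
    mean = sum(rated) / len(rated) if rated else None
    return mean, unrated

# Results returned by the current algorithm and by an experimental one.
old_mean, old_missing = evaluate("q1", ["b.com", "a.com"], is_scores)
new_mean, new_missing = evaluate("q1", ["a.com", "c.com", "d.com"], is_scores)

print("old:", old_mean, "needs rating:", old_missing)
print("new:", new_mean, "needs rating:", new_missing)
```

Only `d.com` has to go to a human rater here, which is why reusing existing ratings keeps each experiment cheap.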

What's the takeaway here? Read Google’s Search Quality Rater Guidelines; they are important.

What is NSR?

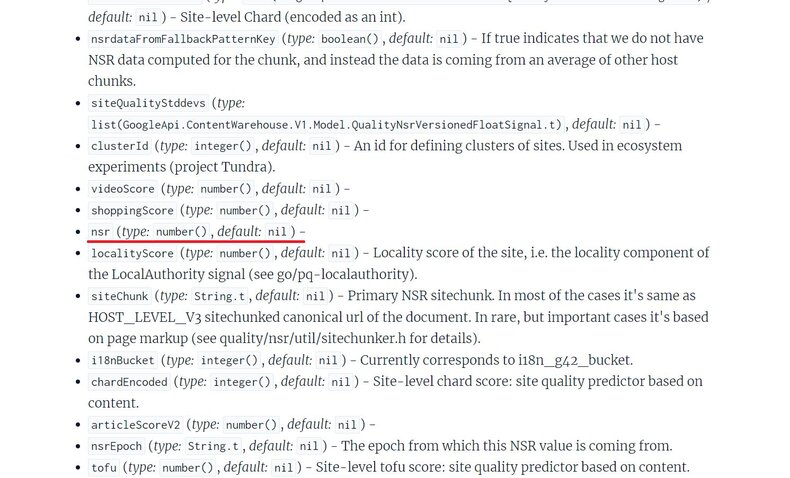

Mike King in his article posed a question: what does NSR mean? Well, NSR is a site rank score, some type of quality measure for the site.

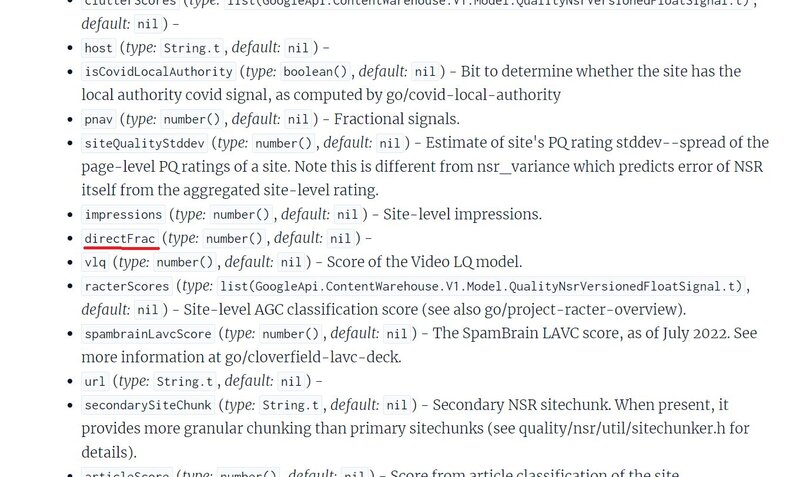

There are a lot of quality-related metrics for the site grouped under the QualityNsrNsrData module. One of them is “directFrac”. I wonder if it could mean the fraction of traffic to a site that is direct traffic.

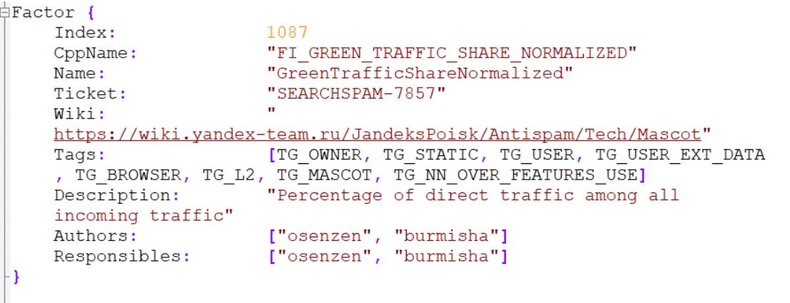

Yandex has a similar ranking factor “GreenTrafficShareNormalized”, which is the percentage of direct traffic among all incoming traffic.

If you think about it, it makes sense. If organic users return to your site, it means that they find it useful and probably good quality. If there are a lot of direct visits, it means users know about the site without the need to search for it and the site has high authority.
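If “directFrac” really is a direct-traffic share (which is only a guess), the arithmetic would be as simple as the sketch below. The traffic source names and visit counts are made up for the example.

```python
def direct_fraction(visits_by_source):
    """Share of all visits that arrived directly (no search, no referral)."""
    total = sum(visits_by_source.values())
    if total == 0:
        return 0.0
    return visits_by_source.get("direct", 0) / total

# Hypothetical monthly visits for one site.
visits = {"direct": 300, "organic": 500, "referral": 200}
print(direct_fraction(visits))  # 0.3
```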

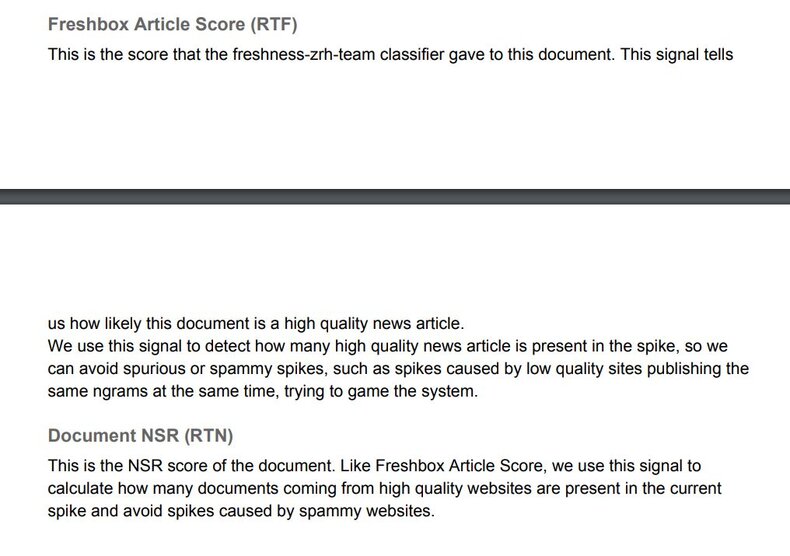

Anyway, we can find more information about NSR in the previous Google leak regarding “Realtime Boost”:

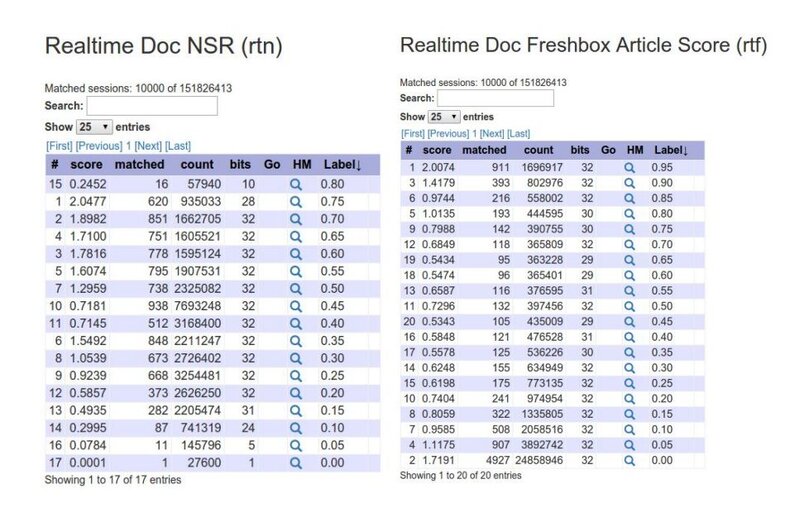

“The NSR (site rank score) and Freshdocs Article Score both shows that most documents were high quality news:”

With realtime boost, Google was trying to detect spikes in queries and new articles published after breaking news, in order to show the freshest articles on the first page.

They used two metrics: Freshbox Article Score and NSR to determine results coming from high quality websites. Freshbox is related to Google News. Freshbox Article Score helps to determine high quality news websites, and NSR to determine high quality websites (in general). The slides also indicate that Google can measure NSR in real time.

As there are a lot of site-wide quality metrics grouped under the QualityNsrNsrData module, NSR could be a very important ranking factor.

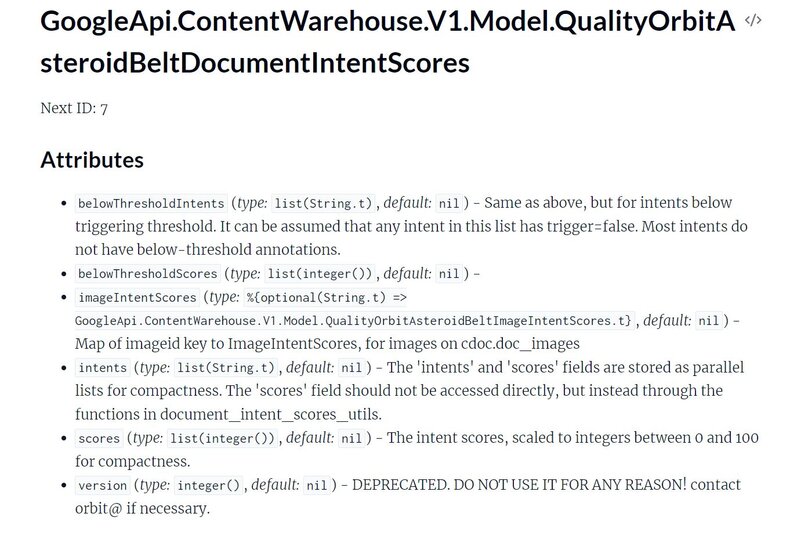

Search Intents

There is a field called “asteroidBeltIntents” inside the PerDocData module, which contains a list of intents for the document along with the corresponding scores (which may indicate confidence). This data also includes image intents for every image on the page.

Here Google uses search intents in a broader sense than the de facto standard in the SEO community. During the DOJ court trial, Pandu Nayak gave three examples where the search intent is different for the same query on different devices or in different locations.

In the first example, the query “football” will obviously trigger different results in the US compared to the UK.

In the second example, for the query “Bank of America”, users on desktop will be served with the results about online banking, and on mobile with the map, and the locations of ATMs.

In the third example, for the query “Norton Pub”, desktop users want to see Norton Publishing Company, whereas mobile users want to find a pub named Norton.

Navboost click data help to distinguish between these intents. Navboost is a signal used for ranking web results (10 blue links). There is another system called Glue, which some people mistakenly call the same thing as Navboost. Glue deals with other search features on the page. It analyzes clicks, hovers, scrolls, and swipes to determine when and where these other search features should appear on the page.

Prof. Edward Fox: “In simpler terms, Glue aggregates diverse types of user interactions—such as clicks, hovers, scrolls, and swipes—and creates a common metric to compare web results and search features. This process determines both whether a search feature is triggered and where it triggers on the page.”

Using Glue signals, another system called Tangram (formerly Tetris) assembles search engine results pages (SERPs), determining which other search features to display (Top stories, Images, Videos, People Also Ask, etc.) and where to display them on the page.

This may indicate that Google has a very granular search intent classification system, and can combine results for multiple search intents on one page.

Scratching the surface

This analysis is just a starting point for delving deep into what we can learn from these leaks.

Additional areas (among others) where we can see there are potential insights we can glean include:

- Links

- Topicality

- Entities

- Local SEO

Look out for further deep dives as we spend more time analysing these documents.

In Summary

By combining the recent Google API documentation alongside the recent US court submissions and other related Google leaks we have managed to gain a much clearer insight as to how the Google search algorithm works and what data and systems it relies on.

However, it’s key to understand that there is still much that we don’t know, which means that we must be careful before drawing any definite conclusions. There is still much to test and learn, but we do at least now know more of the right areas and data points to explore.