How Google Search Works: Inverted Index, RankBrain, RankEmbed, DeepRank, Twiddlers

Last updated: June 11, 2025

In this article we will explore how Google Search works at a higher level and what are internal Google systems used during the retrieval and ranking stages of search.

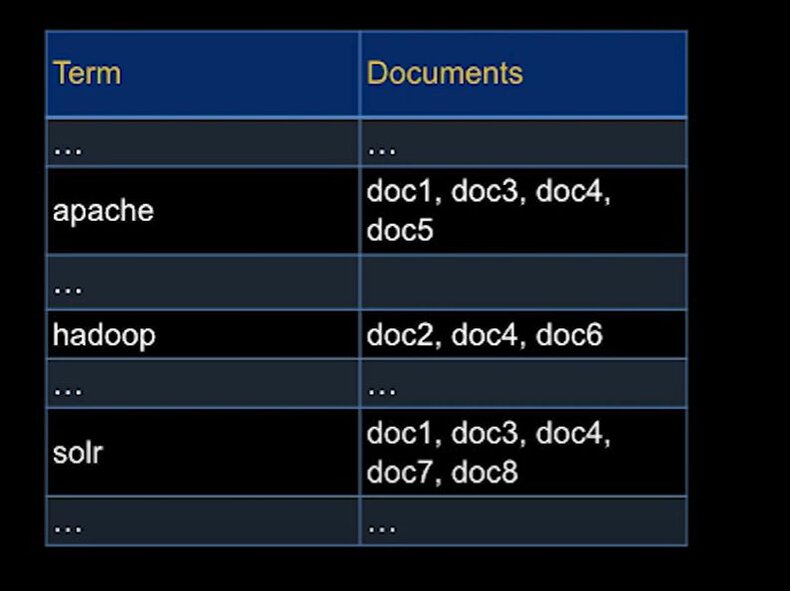

Inverted index

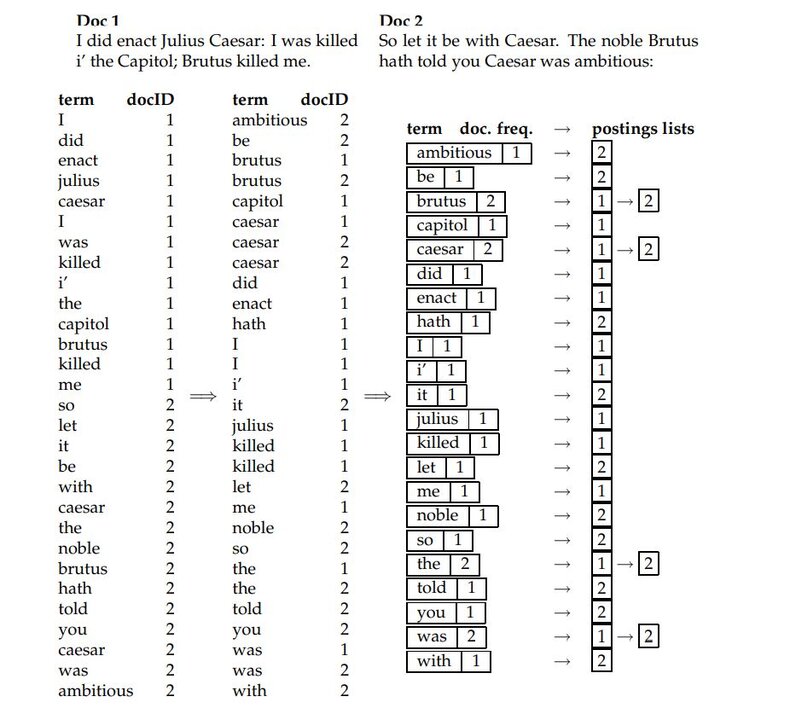

After crawling and rendering of a web page is done, its content is split up into words. After that, the most common words (such as articles, etc), called stop words, are filtered out. The remaining words are put into a data structure called an Inverted index. In the inverted index, each word in a vocabulary is linked to a corresponding list of all document ids of all the documents that contain this word on a page. This special list is called a posting list.

Image source https://www.youtube.com/watch?v=4fMZnunTRF8

This is similar to an index at the back of a book. For every term in the book, there are corresponding page numbers for a quick lookup. Inverted indexing is based on the same idea. It’s a very efficient and fast data structure, and makes search through trillions of documents very fast. In computer science, this algorithm is said to be run in constant time, or have O(1) complexity.

Despite being fast, this simple form of retrieval called Boolean retrieval (whether a document contains a keyword or not) doesn’t tell anything about the rankings of documents (which documents are more relevant), and may return thousands of loosely related documents. Therefore we need another metric on top of that.

TF-IDF and BM25

The initial idea is that if a certain word is repeated multiple times (Term Frequency or TF) in the document, then it is probably a good description for the document. But not all words are equal. For example, the term “SEO” used in a document has a more specific meaning, or can tell more information about the content of a document than words like “meeting” or “management”. To measure the importance of a term, researchers came up with another metric called Inverse Document Frequency (IDF). It’s the number of all documents in the corpus divided by the number of all documents containing a certain word. Because the resulting number could be quite high, then we should apply the LOG() function after the division. Multiplication of TF with IDF, called TF-IDF, is a good initial estimator of the importance of a keyword/term in the document. There is a fancy math formula for calculating TF-IDF, which also normalizes TF with the document length, called Okapi BM25. Historically, it’s the most popular metric of keyword importance in the document, and serves as a baseline in information retrieval research.

Image source: Manning. Introduction to Information Retrieval

While TF-IDF and BM25 allow us to quickly search billions of documents and provide initial relevance scoring, they cannot understand semantics of the text, relationships between words, synonyms, etc, and render relatively poor performance by modern standards.

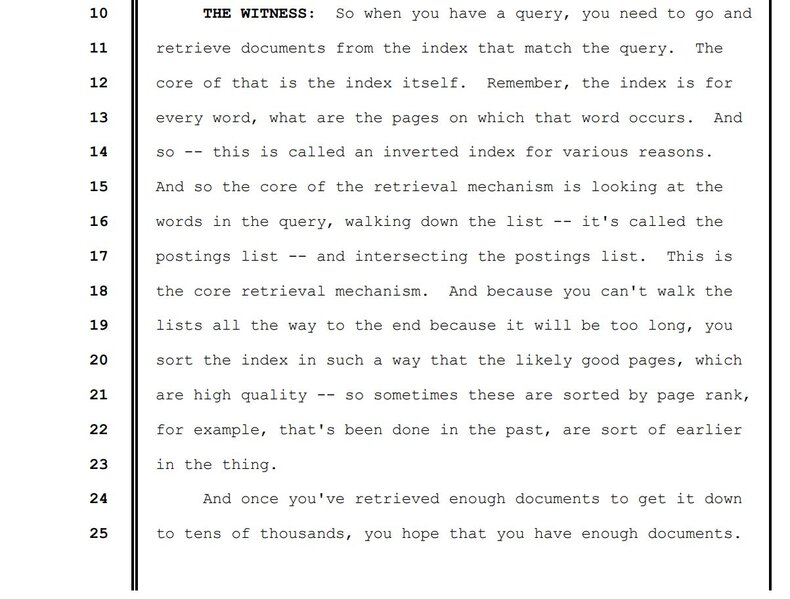

Here is how Pandu Nayak, VP of Search at Google, characterized the Google retrieval process during the DOJ court hearings

“THE WITNESS: So when you have a query, you need to go and retrieve documents from the index that match the query. The core of that is the index itself. Remember, the index is for every word, what are the pages on which that word occurs. And so -- this is called an inverted index for various reasons. And so the core of the retrieval mechanism is looking at the words in the query, walking down the list -- it's called the postings list -- and intersecting the postings list. This is the core retrieval mechanism. And because you can't walk the lists all the way to the end because it will be too long, you sort the index in such a way that the likely good pages, which are high quality -- so sometimes these are sorted by page rank, for example, that's been done in the past, are sort of earlier in the thing.

And once you've retrieved enough documents to get it down to tens of thousands, you hope that you have enough documents. So this is the core of the retrieval mechanism, is using the index to walk down these postings lists and intersect them so that all the words in the query are retrieved

THE COURT: And the ranking is done only after you have culled it to the tens of thousands?

THE WITNESS: Exactly. So that's -- the next phase is to say, okay, now I've got tens of thousands. Now I'm going to use a bunch of signals to rank them so that I get a smaller set of several hundred. And then I can send it on for the next phase of ranking which, among other things, uses the machine learning.”

Google uses topicality signals, page rank signals and localization signals to narrow down tens of thousands of documents to a top few hundred (this step is performed by the core ranking systems), and then these hundreds or even dozens of documents are passed to deep learning systems to generate additional signals.

Google has 3 deep learning models in place: RankBrain, RankEmbed and DeepRank. Deep learning means neural networks with many hidden layers. That’s why they are called deep. Historically, RankBrain was developed first, and launched in 2015. Later Google has added RankEmbed for document retrieval and DeepRank for ranking.

RankEmbed

In their blog post How AI powers great search results, Google lists AI systems used in search. RankEmbed corresponds to Neural matching in this article. Here is what Google said in their article:

“But it wasn’t until 2018, when we introduced neural matching to Search, that we could use them to better understand how queries relate to pages. Neural matching helps us understand fuzzier representations of concepts in queries and pages, and match them to one another. It looks at an entire query or page rather than just keywords, developing a better understanding of the underlying concepts represented in them…

This level of understanding helps us cast a wide net when we scan our index for content that may be relevant to your query.”

Basically, it refers to a concept of embeddings, when documents are represented as multidimensional vectors, and queries are encoded as vectors in the same space. If two vectors have similar meaning, then they are located closely in that space(or more accurately they have a small angle between them).

These document embeddings are most likely stored in another type of index, different from Inverted index, to support vector search (Approximate Nearest Neighbors). Vector search based on embeddings is slower than traditional Boolean or TF-IDF retrieval, but is still much faster than deeper neural network predictions.

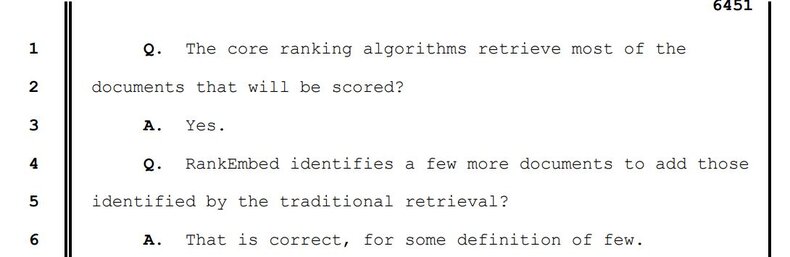

Here is what Pandu Nayak said about RankEmbed during the DOJ court trial:

“A. Other than, as I said, the RankEmbed thing also does retrieval.

THE COURT: I'm sorry, you said RankEmbed?

THE WITNESS: Yes. RankEmbed bought one of those deep learning systems that also does retrieval.“

“Q. RankEmbed identifies a few more documents to add those identified by the traditional retrieval?

A. That is correct, for some definition of few.”

RankBrain

RankBrain was initially launched in 2015 to improve Google’s understanding of long tail queries. Here is what Google wrote about RankBrain on their blog:

“understand how words relate to concepts. Humans understand this instinctively, but it’s a complex challenge for a computer. RankBrain helps us find information we weren’t able to before by more broadly understanding how words in a search relate to real-world concepts. For example, if you search for “what’s the title of the consumer at the highest level of a food chain,” our systems learn from seeing those words on various pages that the concept of a food chain may have to do with animals, and not human consumers.”

Before RankBrain, Google struggled to return good results for some hard long term queries. In the example above, with the traditional TF-IDF retrieval, after removing the stop words, the query would be translated into something like “title consumer high level food chain”. If these words are scored individually, TF-IDF is likely to retrieve documents about human consumers, as consumer is the most descriptive term (highest IDF value) in the query. Another approach could be to evaluate n-grams (sequence of words). For example, trigrams, and to look for exact matches of “title consumer high”, “consumer high level”, “high level food”, and “level food chain”. Still none of these would yield appropriate results.

That’s why there was a need for using machine learning to better understand long queries. Neural networks could determine that the “consumer” here is related to animals, rather than humans. As a result, RankBrain is able to retrieve documents about “apex predators”.

Pandu Nayak confirmed that DeepRank is taking on more of the functions of RankBrain now (though RankBrain is still in use):

“Q. And at the top there -- of that bullet it says: "Does DeepRank replace RankBrain? No, complementary strengths." Do you see that?

A. Yes.

Q. And you agree with that?

A. Yeah, I mean, I think that's not an unreasonable position, though over time they are becoming less complementary. At the time that this was done, maybe they were more complementary, but DeepRank is taking on more and more of that capability now.”

DeepRank

DeepRank is a more sophisticated ranking model based on the transformer architecture. It is more expensive to run in terms of latency (speed of generating and returning SERP). DeepRank is based on BERT, and is able to understand the meaning of a document. Because it is slower, and Google cares hugely about speed, DeepRank is only used at the last stage for the final 20-30 documents retrieved by the previous systems.

From the DOJ trial:

“Q. And then it says: "DeepRank understands language and has common-sense." Do you see that?

A. Yes.

Q. And those are two different things. The ranking part and the understanding language part, those are two separate things, right?

A. The understanding language leads to ranking. So DeepRank does ranking also.”

“Q. And these are machine learning systems, the ones we've talked about, right?

A. Yes.

Q. And then it says -- a little bit farther down in the middle of the next paragraph -- actually, let's stay there. The next sentence says: "DeepRank not only gives significant relevance gains, but also ties ranking more tightly to the broader field of language understanding."

“Q. What does that mean, computationally expensive?

A. So training -- so DeepRank is this bold model which uses these transformers which looks at the sequences of things. And it needs to look at -- it turns out training transformer models is more expensive than training straightforward feedforward networks like Google Brain or RankBrain. Those are cheaper to train. And so you need -- it's more expensive in that sense, more computation is required. Because, in a sense, it's solving a harder problem.”

To make an analogy, DeepRank is something like a baby version of ChatGPT, specifically tailored for ranking. After creating DeepRank, Google has come up with other more powerful models like MUM (Multitask Unified Model). But Google doesn’t use these models in production, because they are too slow, and unable to meet Google’s latency standards.

Every LLM is essentially a black box. Interestingly enough, Google was very smart not to hand all its ranking functionality to this black box. Google admitted that despite DeepRank bringing relevance improvements, deep ranking models cannot completely replace other algorithms, such as Navboost (based on clicks data).

From the DOJ trial:

“Q. And then the next paragraph begins: "Consequently, DeepRank seems to have the capacity to learn the understanding of language and common-sense that raters rely on to guesstimate relevance, but not nearly enough capacity to learn the vast amount of world knowledge needed to completely encode user preferences." Do you see that?

A. I see that, yes.”

After the initial ranking is done, it’s time to do all sorts of small rerankings to refine it. This is done with the help of Twiddlers.

Twiddlers and Reranking

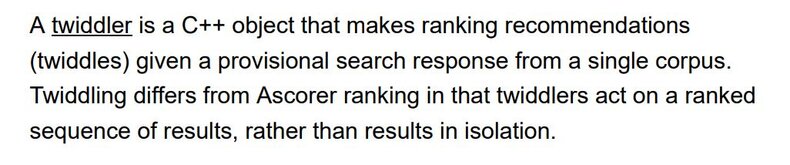

Twiddler is a component responsible for reranking results after the initial preranking is done. There are dozens of independent Twiddlers, each trying to optimize certain signals. Every Twiddler makes a recommendation for promoting/demoting or filtering certain results. At the end, all these recommendations are reconciled by the Twiddler framework.

Twiddlers are a relatively easy and flexible way of changing the initial rankings for different purposes. They allow ease of experimentation. Rather than changing the core ranking functionality (done by the Ascorer component, and containing 1400 jobs), one can just write a Twiddler for a specific use case.

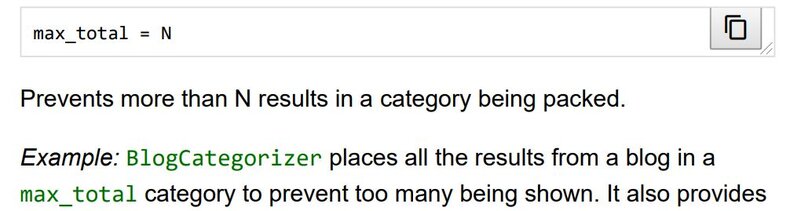

Twiddlers allow engineers to change the initial IR (Information retrieval) score, promote a result above some other result (position), demote a result ( for example to a second page) or filter results. Google also has different categories for web results, and Twiddlers can limit the number of results per category (for example, for blog posts).

Here is an example from the Paul Haahr previously leaked resume that states he was responsible for writing a Twiddler promoting domain diversity:

What is RankBrain?

RankBrain is a deep learning system developed by Google to better match documents with complex long tail queries.

What is RankEmbed?

RankEmbed is an internal system used by Google to retrieve documents relevant to a query. It uses word embeddings in multidimensional space to evaluate semantic similarity between query and document.

What is DeepRank?

DeepRank is a deep learning system used by Google for ranking documents. It understands the meaning of documents and has common sense. Due to its computational complexity DeepRanks is only applied to a handful of search results (final 20-30 documents).

What the difference between RankBrain and BERT?

BERT is a general purpose large language model based on the transformer architecture. RankBrain is an internal Google system for ranking search results. BERT understands the language, RankBrain ranks documents based in part on language understanding.

What the difference difference between RankBrain and neural matching?

RankBrain is a ranking system which helps Google to better understand more complex long tail queries. Neural matching is used in another Google system called RankEmbed for document retrieval. RankEmbed uses document embeddings and vector search to retrieve relevant documents.

What is a Twiddler in Google Search?

Twiddler is a lightweight mechanism for reranking results after initial ranking by Google core algorithm is done. It is used to variety of purposes: promoting domain diversity, boosting blog posts, etc

Does Google use BM25?

It is likely that Google uses BM25 or similar technique for retrieving documents from its inverted index. BM25 is often used as a benchmark for information retrieval tasks, and considered to be a good baseline metric. AI startup Perplexity, competing against Google in the search space, has confirmed that they are using BM25 for retrieving documents from their index.

What is eDeepRank?

eDeepRank is an LLM system used by Google to decompose LLM-based signals and make them more transparent. In other words, it tries to make AI signals interpretable and explainable.