Why is Google using Click Data

Google and click data

One of the bigger revelations from the leaks is that Google is clearly using real user click data taken from the billions of users worldwide of its Chrome browser product.

This clickstream data gives huge insights into how users are interacting with websites.

In addition, Google clearly has user click data from interactions with the SERPs.

Why is Google reliant on clicks?

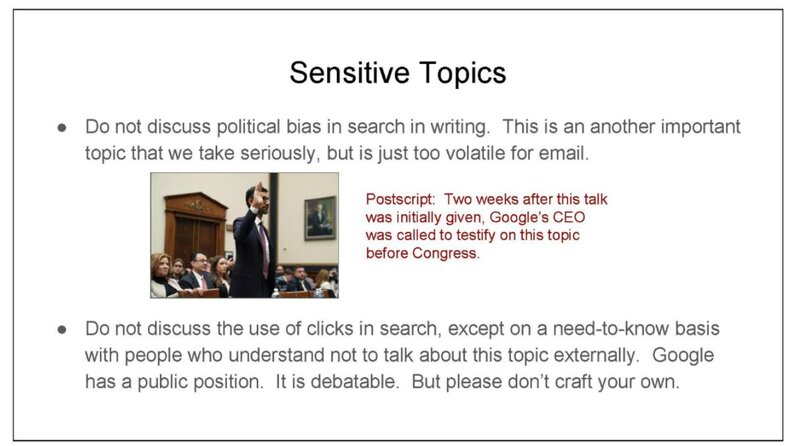

Despite ample evidence that Google uses click data, Google representatives have continued to vehemently deny it. There was an internal policy/recommendation at Google not to speak about sensitive topics, such as clicks: “Do not discuss the use of clicks in search… Google has a public position. It is debatable. But please don't craft your own.”

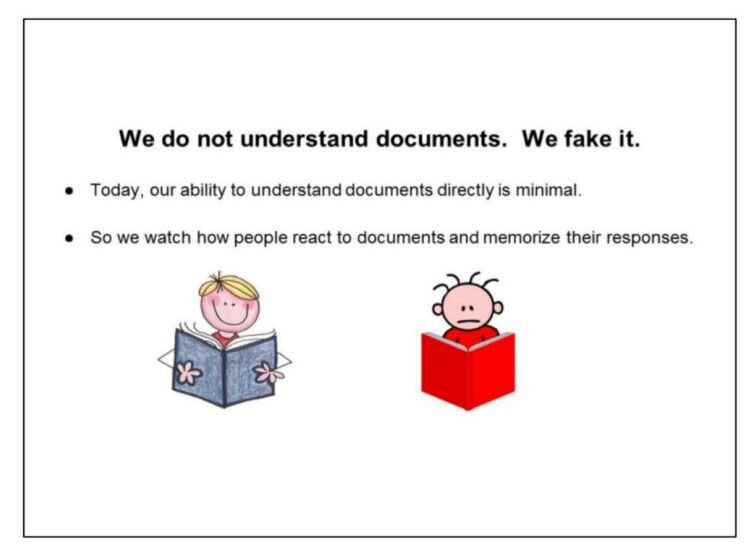

This public position may stem from the fact that earlier (around 2016) Google was very poor at understanding the meaning of documents and relied heavily on click data for relevance judgments. This is a slide from a 2016 presentation:

Google is now able to understand the meaning of documents, but user behavior data is still the best way to tell how satisfied real people are with the search results.

Predicting clicks

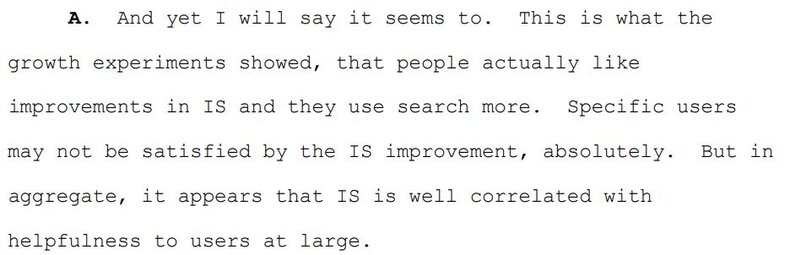

We talked about user satisfaction being important, but why is that? Let’s look at what Pandu Nayak said during the DOJ court trial: “And yet I will say it seems to. This is what the growth experiments showed, that people actually like improvements in IS and they use search more. Specific users may not be satisfied by the IS improvement, absolutely. But in aggregate, it appears that IS is well correlated with helpfulness to users at large.”

In other words, when users are satisfied with the search results, they tend to use search more. More time spent on Search means more ads displayed and more revenue for Google.

Now, the question is: how can we measure user satisfaction automatically? One way is to treat a click on a search result for a particular query as a sign that the result is relevant. If the user then stays on the page for some time (called a long click), this can be taken as a sign that the page met their information need. So we can quantify page relevance for a given query by looking at clicks, or rather Click Through Rate (CTR), and quantify user satisfaction by the time spent on the site after a click (a long click).
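To make these two measurements concrete, here is a minimal sketch in Python. The `Impression` record, the `click_metrics` helper, and the 60-second long-click threshold are all assumptions made for illustration; the leaked documentation does not say how Google defines or stores any of this.

```python
from dataclasses import dataclass

# Assumed threshold for a "long click"; the real value (if any) is unknown.
LONG_CLICK_SECONDS = 60.0

@dataclass
class Impression:
    """One showing of a URL for a query (hypothetical log record)."""
    query: str
    url: str
    clicked: bool
    dwell_seconds: float = 0.0  # time on page after the click; 0 if no click

def click_metrics(impressions, query, url):
    """Return (CTR, long-click rate) for one query/URL pair."""
    shown = [i for i in impressions if i.query == query and i.url == url]
    clicks = [i for i in shown if i.clicked]
    long_clicks = [i for i in clicks if i.dwell_seconds >= LONG_CLICK_SECONDS]
    # CTR: clicks divided by the number of times the result was shown.
    ctr = len(clicks) / len(shown) if shown else 0.0
    # Long-click rate: share of clicks where the user stayed on the page.
    long_click_rate = len(long_clicks) / len(clicks) if clicks else 0.0
    return ctr, long_click_rate

log = [
    Impression("best running shoes", "example.com/a", True, 95.0),
    Impression("best running shoes", "example.com/a", True, 8.0),
    Impression("best running shoes", "example.com/a", False),
    Impression("best running shoes", "example.com/a", False),
]
ctr, long_rate = click_metrics(log, "best running shoes", "example.com/a")
print(ctr, long_rate)
```

In this toy log the result was shown four times and clicked twice, and one of the two clicks was a long one, so CTR speaks to relevance while the long-click rate speaks to satisfaction.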

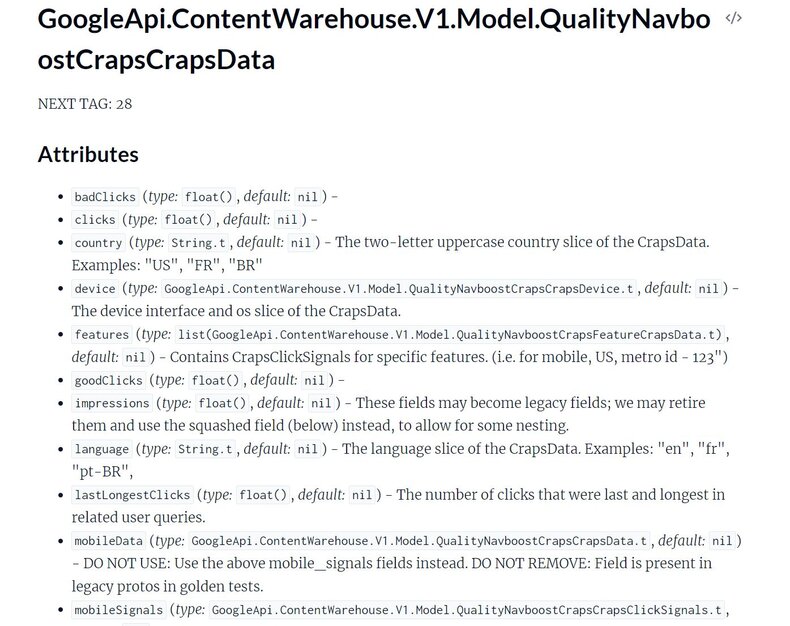

The QualityNavboostCrapsCrapsData module has a param called “lastLongestClicks”: “The number of clicks that were last and longest in related user queries.”

This may be a similar idea to long clicks. If a click was the last one, and the user spent longer on that page than on any result clicked for the previous related queries, then they may have found the answer to the question they were looking for.
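One possible reading of that one-line description can be sketched as follows. This is an interpretation, not Google's implementation: the session layout (a chronological list of `(url, dwell_seconds)` pairs across related queries) and the `last_longest_clicks` function are hypothetical.

```python
from collections import Counter

def last_longest_clicks(sessions):
    """Count, per URL, the sessions in which that URL received the click
    that was both the last one and the longest one (assumed definition)."""
    counts = Counter()
    for clicks in sessions:
        if not clicks:
            continue
        last_url, last_dwell = clicks[-1]
        # The last click qualifies only if no earlier click lasted longer.
        if last_dwell >= max(dwell for _, dwell in clicks):
            counts[last_url] += 1
    return counts

# Each session: clicks in chronological order across related queries.
sessions = [
    [("a.com", 5.0), ("b.com", 12.0), ("c.com", 140.0)],  # c.com: last and longest
    [("c.com", 90.0), ("a.com", 10.0)],                   # a.com: last, not longest
    [("c.com", 30.0)],                                    # single click qualifies
]
counts = last_longest_clicks(sessions)
print(counts)
```

Under this reading, a page that repeatedly ends the search session with the longest visit accumulates a high count, which fits the idea that the user stopped searching because they found their answer.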

Let’s take an example. If result #3 consistently gets more clicks than results #1 and #2, we can say result #3 is more relevant. The CTR of result #3 is effectively an estimate of the probability of a click on that result.

The higher the CTR, or probability of a click, the more likely users will be satisfied with the result. Now imagine that we have a new query for which we don’t have click data. Given all the historical data on previous queries, URLs, and clicks, we can attempt to build a machine learning model that predicts the probability of a click on a given URL for a new query. Then we can rank/sort the results by the predicted probability of a click, or predicted CTR.

In other words, the probability of a click would serve as a proxy metric for ranking documents. The higher the predicted CTR, the higher we should put a document in a ranked list.
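The idea of ranking by predicted click probability can be illustrated with a toy model. The sketch below trains a tiny logistic regression on a single hand-made feature (the share of query words that appear in the page title). The feature, the training data, and all function names are assumptions chosen for illustration; a real system would learn from vastly more data and far richer signals.

```python
import math

def features(query, title):
    """Bias term plus the share of query words found in the title."""
    q = set(query.lower().split())
    t = set(title.lower().split())
    overlap = len(q & t) / len(q) if q else 0.0
    return [1.0, overlap]

def predict(weights, x):
    """Predicted probability of a click (logistic function)."""
    z = sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))

def train(examples, epochs=500, lr=0.5):
    """Fit the weights with plain stochastic gradient descent on log loss."""
    weights = [0.0, 0.0]
    for _ in range(epochs):
        for query, title, clicked in examples:
            x = features(query, title)
            error = predict(weights, x) - clicked
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return weights

# Hypothetical historical data: (query, result title, clicked?).
history = [
    ("cheap flights paris", "Cheap flights to Paris", 1),
    ("cheap flights paris", "History of aviation", 0),
    ("python sort list", "How to sort a list in Python", 1),
    ("python sort list", "Snake care guide", 0),
]
weights = train(history)

def rank(query, titles):
    """Sort candidate results by predicted click probability, highest first."""
    return sorted(titles,
                  key=lambda t: predict(weights, features(query, t)),
                  reverse=True)

# A new query with no click history of its own.
ranked = rank("learn guitar chords",
              ["Tax filing tips", "Guitar chords for beginners"])
print(ranked)
```

The model has never seen the guitar query, yet it can still order the candidates because it learned a general pattern from past clicks rather than memorizing individual queries.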

The question is whether we can build such a model, and whether it could replace human raters and click data. Google engineers asked themselves the same question in the 2016 Google presentation Unified Click Prediction.

Google concluded that showing results that users want to click on is close to its goal. It can make this prediction extremely well by looking at trillions of examples of user behavior. But it is slightly more complicated than that: predicting clicks alone would promote low-quality, click-bait results, promote results with genuine appeal that are not relevant, demote official pages, and so on.

Google recognized that this is a very hard problem to solve, because it essentially means emulating the behavior of a human searcher, a problem it compared to passing a Turing test (passing a Turing test was believed to show that a machine has intelligence, and was deemed impossible at the time).

Using human quality ratings data for modeling user behavior can be compared to a very blurry, low resolution image.

Using click data, on the other hand, produces a very clear and sharp image, and allows Google to identify new behavior patterns, which may not be present in quality ratings.

AI is now able to pass the Turing Test, and Google has much more capacity nowadays. But as mentioned before, Google admits that “DeepRank seems to have … not nearly enough capacity to learn the vast amount of world knowledge needed to completely encode user preferences”. Google could add more and more neurons to these models, but that would mean more execution time and increased latency. As an example, if you ask ChatGPT whether a particular text is relevant for a certain query, it would probably take a couple of dozen seconds to reply. For Google, the response time is sub-second.

Imagine Google built this monstrous AI system to predict user behavior. It wouldn’t solve the problem completely, as 15% of all queries that Google sees every day are new queries that it has never seen before. So Google would need to retrain this AI every day with this fresh data and its click data. Either way, Google will continue using click data, as it’s impossible not to do so with the current state of technology.

In conclusion

People usually say that SEO is based on three pillars: content, technical SEO, and backlinks. Now is a good time to add a fourth pillar: user interactions (clicks, dwell time, etc.).