Google Core Ranking Factors: Relevance and Page Quality

Relevance vs Page Quality

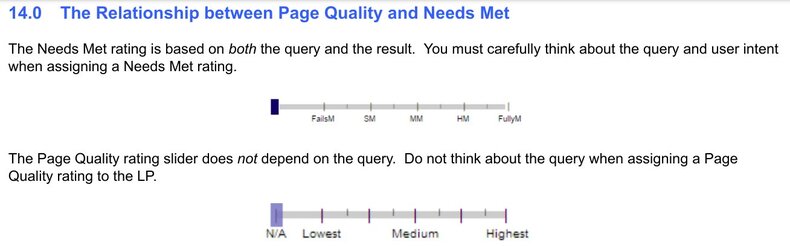

Google cares most about user satisfaction. It has an internal metric called the IS score, given by human raters for each search result. This is most likely a combination of the Needs Met (page relevance) rating and the Page Quality rating, both assigned by search quality raters during search quality evaluations.

Needs Met is a query dependent metric (how well a page matches a query), and Page Quality is a query independent metric (how much effort went into the creation of the page). Traditional Information Retrieval is concerned only with relevance: how well the results match the query. This is because the documents it returns are assumed to be uniform in quality, for example when they all come from one ecommerce site, one blog, or one educational site.

So why did Google introduce this other query independent metric called Page Quality and ask raters to assess it from Lowest to Highest? The short answer is Spam.

The History Behind Page Quality Metric

The story of Google started with PageRank, an algorithm designed to fight spam. At the time, webspam was rendering other search engines’ results useless, and PageRank was used as an easy way to assign quality scores to web pages. PageRank was harder for individual webmasters and spammers to manipulate. Since then there has been a constant cat and mouse game between Google and link spammers.

Let’s review some excerpts from the Google documentary Trillions of questions, no easy answers.

Cathy Edwards, head of User Trust for Search: “Spam is one of the biggest problems that we face… As an example, 40% of pages that we crawled in the last year in Europe were spam pages. This is a war that we’re fighting, basically”.

This is fascinating: 40% of the pages crawled in Europe in a year were spam! Google has to keep crafting new ways to detect spam on a scale of trillions of pages.

It doesn’t take long to realize that users don’t want to see spam; what they want to see are high quality pages. So what is a simple mechanism to filter spam? Find sites that you can trust. In other words, the metric opposite to spam is Authority. If a page has a high PageRank, many other pages link to it, and it’s unlikely to be spam. Looked at from another perspective, this page has high authority. Because Google only needs to return a few dozen top blue links, it is much easier to pick them from high quality, high authority, trusted pages than to filter spam out of trillions of pages.
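To make the link-based reasoning concrete, here is a minimal sketch of PageRank as it is usually taught: a power iteration over a link graph. It is not Google’s production system. The damping factor of 0.85, the iteration count, and the four-page toy graph with its page names are all assumptions chosen for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook power-iteration PageRank over a dict of page -> outgoing links."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution

    for _ in range(iterations):
        # Every page receives a small baseline share (the "random jump").
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its rank evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


# Hypothetical toy web: nobody links to "spam", so it only gets the baseline.
web = {
    "news": ["wiki"],
    "blog": ["wiki", "news"],
    "wiki": ["news", "blog"],
    "spam": ["wiki"],
}
ranks = pagerank(web)
for page, score in sorted(ranks.items(), key=lambda item: item[1], reverse=True):
    print(f"{page}: {score:.3f}")
```

In this toy graph the page that no one links to ends up with the lowest score, which is the intuition behind using links as a cheap proxy for trust.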

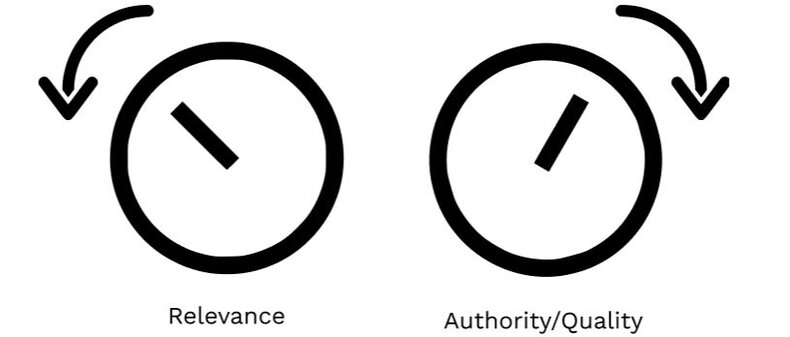

Relevance and Page Quality (Authority or E-E-A-T) are competing metrics. When you increase the importance of one metric, for example Page Quality, you may end up returning slightly less relevant results in the SERP. This is visualized in the picture as two knobs that one can turn to regulate the importance of each factor.

Obviously, for YMYL (Your Money or Your Life) queries, this Authority/Quality part is more important, as Google tries to avoid legal and moral risks caused by displaying information that could hurt people.
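The knob idea can be sketched as a weighted blend of two scores. This is only an illustration of the trade-off, not Google’s actual formula: the linear blend, the single `quality_weight` knob (standing in for both knobs), the weight values, the example URLs, and the scores are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Result:
    url: str
    relevance: float  # query dependent, 0..1 (how well the page matches the query)
    quality: float    # query independent, 0..1 (authority / page quality)


# Hypothetical knob settings: YMYL queries lean much harder on quality.
QUALITY_WEIGHT = {"default": 0.2, "ymyl": 0.7}


def blended_score(result, quality_weight):
    """Linear blend: turning quality_weight up turns relevance down."""
    return (1.0 - quality_weight) * result.relevance + quality_weight * result.quality


def rank_results(results, query_class="default"):
    """Sort results by blended score, best first, for the given query class."""
    weight = QUALITY_WEIGHT[query_class]
    return sorted(results, key=lambda r: blended_score(r, weight), reverse=True)


# Made-up scores: one page matches the query wording closely but is low
# quality, the other is trusted but matches less exactly.
results = [
    Result("exact-match-low-trust.example", relevance=0.9, quality=0.2),
    Result("trusted-general.example", relevance=0.6, quality=0.9),
]

print([r.url for r in rank_results(results)])
print([r.url for r in rank_results(results, "ymyl")])
```

With the default weight the exact-match page ranks first; with the YMYL weight the trusted page overtakes it, even though it is the less relevant of the two.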

Low Quality Sites and Google Reputation Risks

Let’s get back to the documentary.

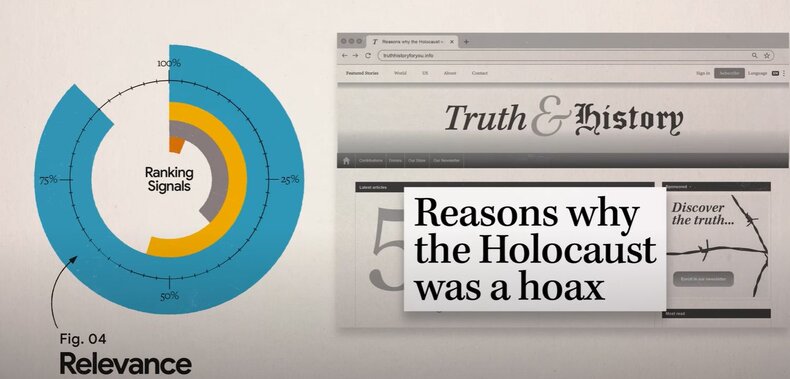

Meg Aycinena Lippow, Software Engineer, Search: “Every query is going to have some notion of relevance and each one’s going to have some notion of quality. And we are constantly trying to trade off which set of results balances those two the best”.

The documentary gives this example: Google once embarrassed itself by displaying a result that said The Holocaust never happened. The query used was “Did The Holocaust happen”. The problem was that high quality websites didn’t bother to say explicitly on the page that it did happen; they talked about The Holocaust in general. Low quality conspiracy theory websites, however, explicitly said The Holocaust never happened. They matched the search query perfectly, and were recognized by Google as being more relevant.

What happened was that the relevance signals were overpowering the quality signals.

Image source https://www.youtube.com/watch?v=tFq6Q_muwG0

Pandu Nayak, VP Search: “We have long recognized that there’s a certain class of queries, like medical queries, like finance queries. In all of these cases, authoritative sources are incredibly important. So we emphasize expertise over relevance in those cases.”

For YMYL queries, Google turns this Authority/Quality knob a bit, surfacing less relevant, but more trusted and authoritative pages.

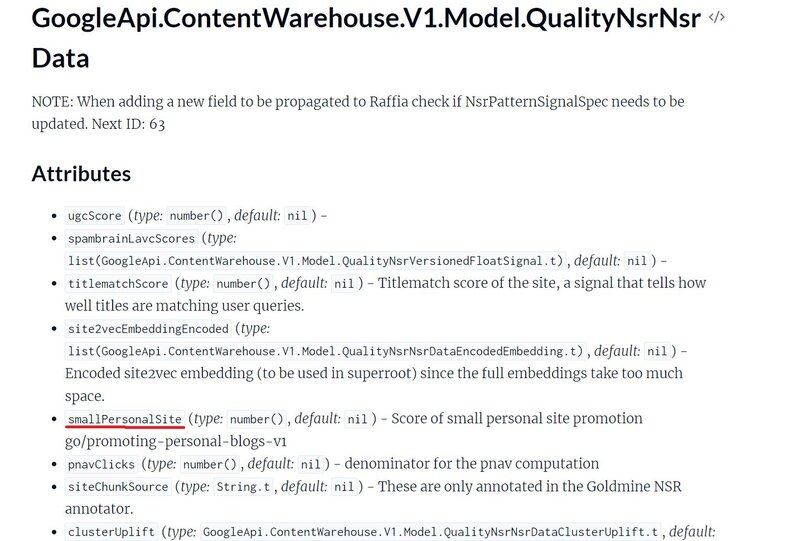

Now imagine that a lot of people start creating automated AI content that is not very helpful to people. How will Google respond? It will probably create a classifier for AI content, a new signal, and put more emphasis on it. At the same time, the importance of Authority/Quality factors could go up a bit in general. Small niche sites that don’t have strong quality signals would then drop in rankings. But who cares about small websites, unless a lot of people start to complain? It looks like Google does. In the QualityNsrNsrData module there is a field called “smallPersonalSite”. Some people take it as a sign that Google dislikes small sites, but I think it’s the opposite: Google is trying to foster diversity in search results, including smaller sites that haven’t yet had a chance to build quality signals comparable to those of larger sites.

In conclusion

Because Relevance and Authority/Quality pull against each other, Google Search is by design incapable of producing perfect results. This design choice was motivated by the need to fight spam and misinformation, technology’s inability to understand content at a human level, and constantly changing information (new knowledge, news and opinions are constantly being created). This means that there will always be people upset by the next Google core algorithm update, when the importance of different ranking factors changes.